So far, we've assumed a constant frame rate.

If our animation is defined by an easing curve, we can look up its value at any point along the way.

At first, variable frame rates seem trivial: we can evaluate the curve at arbitrary times instead of pre-set intervals.

If we take forward differences to measure slope, we still get a smooth velocity curve. We can accumulate, or integrate, these differences back into position as long as we account for a variable time step $ Δt_i $. It seems our physics engine should be unbothered too. But there are a few problems.

First, if we implemented inertial scrolling like we did before, multiplying the velocity by $ 1 - \class{royal}{f} $ every frame, we'd get the wrong curve. The amount of velocity lost per frame should now vary; we can no longer treat it as a convenient constant.

If we do the math, we can find an expression for the correct amount of friction $ \class{purple}{f_i} $ per frame for a given step $ Δt_i $, relative to the default $ \class{royal}{f} $ and $ Δt $. Not pretty, and this is just one object experiencing one force. In more elaborate scenarios, finding exact expressions for positions or velocities can be hard or even impossible. This is what the physics engine is supposed to be doing for us.
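One way to write that expression, assuming the velocity should decay by the same proportion over equal spans of time: keeping $ 1 - f $ of the velocity per default step $ Δt $ compounds to $ (1 - f)^{Δt_i / Δt} $ over a step $ Δt_i $. The sketch below uses made-up names (`friction_for_step`, `coast`) and only fixes the velocity at frame boundaries, not the position.

```python
from typing import Iterable


def friction_for_step(f: float, dt_ref: float, dt_i: float) -> float:
    """Friction f_i for a step of length dt_i, given friction f per dt_ref."""
    # Keeping (1 - f) of the velocity per reference step compounds to
    # (1 - f) ** (dt_i / dt_ref) over a step of length dt_i.
    return 1.0 - (1.0 - f) ** (dt_i / dt_ref)


def coast(v: float, f: float, dt_ref: float, steps: Iterable[float]) -> float:
    """Apply friction over a series of variable time steps; return the velocity."""
    for dt_i in steps:
        v *= 1.0 - friction_for_step(f, dt_ref, dt_i)
    return v
```

With this correction, thirty steps of 1/60 s and four uneven steps that also add up to half a second leave the same velocity behind.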

There's another problem. If we integrate these curve segments to get position, we get an exponential curve, just as before. Did we achieve frame rate independence?

Well, no. If we change the time steps and run the algorithm again, it looks the same. However, the new curve and old curve don't match up. The difference is surprisingly large, as this animation is only half a second long and the average frame rate is identical in both cases. Such errors will compound the longer it runs, and make your program unpredictable.

Luckily we can have our cake and eat it too. We can achieve consistent physics and still render at arbitrary frame rates. We just have to decouple the physics clock from the rendering clock.

Whenever we have to render a new frame, we compare both clocks. If the render clock has advanced past the physics clock, we do one or more simulation steps to catch up. Then we interpolate linearly between the last two values until we run out of physics again.

This means the visuals are delayed by one physics frame, but this is usually acceptable. We can even run our physics at half the frame rate or less to conserve power. Though more error will creep in, this error will be identical between all runs, and we can manually compensate for it if needed.
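Here is a minimal sketch of the two-clock idea, assuming a scalar state and a render clock that never runs backwards. The class name and the `step_fn` callback are hypothetical, not taken from any particular engine.

```python
class FixedStepSimulation:
    """Fixed-step physics with linear interpolation for rendering."""

    def __init__(self, step_fn, state, dt):
        self.step_fn = step_fn   # hypothetical callback: (state, dt) -> next state
        self.dt = dt             # fixed physics time step
        self.steps = 0           # physics clock, counted in whole steps
        self.previous = state    # state one physics step ago
        self.current = state     # state at the physics clock

    def render(self, render_time):
        """Return the interpolated state for a render clock value >= 0."""
        # Catch up: step until the physics clock reaches the render clock.
        while self.steps * self.dt < render_time:
            self.previous = self.current
            self.current = self.step_fn(self.current, self.dt)
            self.steps += 1
        # Blend the last two physics states by how far the render clock
        # sits between them (alpha = 1 means exactly on the latest step).
        alpha = 1.0 - (self.steps * self.dt - render_time) / self.dt
        return self.previous + (self.current - self.previous) * alpha
```

Counting whole steps instead of accumulating `dt` keeps the physics clock identical between runs, whatever times `render` is called at.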

When we implement variable frame rates correctly, we can produce an arbitrary number of frames at arbitrary times. This buys us something very important, not for the end-user, but for the developer: the ability to skip to any point in time, or at least fast-forward as quickly as your computer can manage.

But just because the simulation is consistent, doesn't mean it's correct or even stable. Euler integration fits our intuitive model of how pieces add up, but it's actually quite terrible. For example, if we made our bouncing apple perfectly elastic in the physical sense, losing no energy at all, and applied Euler, it would start bouncing irregularly, gaining height.

Which means the first bounce simulation wasn't using Euler at all. It couldn't have: the energy wouldn't have been conserved. All the finite differentiation and integration magic that followed only worked neatly because the position data was of a higher quality to begin with. We have to find the source of this phantom energy so we can correct for it, creating the Verlet integration that was used.

We're trying to simulate this path, the ideal curve we'd get if we could integrate with infinitely small steps. We imagine we start at the point in the middle, and would like to step forward by a large amount. The time step is exactly 1 second, so we can visually add accelerations and velocities like vectors, without having to scale them. Note that this is not a gravity arc: the downward force now varies.

Earlier, I said that if we used forward differences, we could get the velocity between two points. And that we could make a reconstruction of position from forward velocity by applying 'Euler integration'. While that's true, that's not actually what Euler integration is.

See, this is a chicken and egg problem. This velocity isn't the slope at the start or the end or even the middle. It's the average velocity over the entire time step. We can't get this velocity without knowing the future position, and we can't get there without knowing the average velocity in the first place.

The velocity that we're actually tracking is for the point itself, at the start of the frame. Any force or acceleration is calculated based on that single instant. If we integrate, we move forward along the curve's tangent, not the curve itself. This is where the extra height comes from, and thus, phantom gravitational energy.
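We can put a number on the phantom energy in the simplest case. The sketch below assumes plain free fall under constant gravity and a unit mass (both assumptions for illustration, simpler than the varying force in the picture). Working out one Euler step by hand shows the energy grows by $ \frac{1}{2} g^2 Δt^2 $ every frame, and the code agrees.

```python
G = 9.81  # assumed gravitational acceleration in m/s^2, pointing down


def euler_step(x, v, dt):
    """Explicit Euler: move along the tangent at the start of the step."""
    return x + v * dt, v - G * dt


def energy(x, v):
    """Energy per unit mass: kinetic plus gravitational potential."""
    return 0.5 * v * v + G * x


def energy_after(x, v, dt, steps):
    """Run Euler for a number of steps and report the resulting energy."""
    for _ in range(steps):
        x, v = euler_step(x, v, dt)
    return energy(x, v)
```

Nothing pushes the object, yet `energy_after` always reports more than it started with.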

For any finite step, there will always be some overshooting, because we don't yet know what happens along the way. Euler actually made the same mistake we made earlier: he used a central difference where a forward one was required, because the forward difference can only be gotten after the fact. The 'central difference' here is the actual velocity at a point, the true derivative.

As the acceleration changes in this particular scenario, we could try applying Euler, and then averaging the start and end velocities to get something in the middle. It fails, because the end velocity itself is totally wrong. Though we get closer than Euler did, we now undershoot by half the previous amount.

To resolve the chicken and egg, we need to look to the past. We assume that rather than starting with one position, we start with two known good frames, defined by us. That means we can take a backwards difference and now know the average velocity of the previous frame. How does this help?

Well, we assume that this velocity happens to be equal to, or close to, the velocity at the halfway point. We also still assume the acceleration is constant for the entire duration. If we then integrate from here to the next halfway point, something magical happens.

We get a perfect prediction for the next frame's average velocity, the forward difference. By always remembering the previous position, we can repeat this indefinitely. That this works at all is amazing: we're applying the exact same operation as before—constant acceleration—for the same amount of time. On just a slightly different concept of velocity. Without even knowing exactly when the object reaches that velocity. That's Verlet integration.

Euler integration failed on a simple constant acceleration like gravity and can only accurately replicate a linear ease $ f $. This motion is a cubic ease $ f^3 $, with linear acceleration that decreases. Verlet still nails it, even when leaping seconds at a time. Why does this work?

Euler integration applies a constant acceleration ahead of a point. If there's any decrease in acceleration, it overestimates by a significant amount. That's on top of stepping in the wrong direction to begin with. Both position and velocity will instantly begin to drift away from their true values.

Verlet integration applies the same constant acceleration around a point. If the acceleration is a perfect line, the error cancels out: the two triangles make up an equal positive and negative area. By starting with a known good initial velocity and cancelling out subsequent errors, we can precisely track velocity through a linear force. If we simplify the formula, velocity even disappears: we can work with positions and acceleration directly.

As this captures the slope of acceleration, we only get errors if the acceleration curves. In this case, the left and right areas don't cancel out exactly. The missing area however smoothly approaches 0 as the time step shrinks, a further sign of Verlet's error-defeating properties. If we do the math, we find the position has $ O(Δt^2) $ global error: decrease the time step $ Δt $ by a factor of 10, and it becomes 100× more accurate. Not bad.

For completeness, here's the 4th order Runge-Kutta method (RK4), which is a sophisticated modification of Euler integration. It involves taking full and half-frame steps and backtracking. It finds 4 estimates for the velocity based on the acceleration at the start, middle and end.

The physics can then be integrated from a weighted sum of these estimates, with coefficients $ [\frac{1}{6}, \frac{2}{6}, \frac{2}{6}, \frac{1}{6}] $. We end up in the right place, at the right speed. This method offers an $ O(Δt^4) $ global error. Decrease the time step 10× and it becomes 10,000× more accurate. We have a choice of easy-and-good-enough (Verlet) or complicated-but-precise (RK4), at any frame rate. Each has its own perks, but Verlet is most attractive for games.
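For reference, here is a sketch of one RK4 step for a position and velocity pair. It assumes the force is available as a callback `accel(t, x, v)`, which is a hypothetical signature chosen for this example.

```python
def rk4_step(accel, t, x, v, dt):
    """Advance position x and velocity v by dt using classic RK4.

    accel(t, x, v) is a hypothetical callback returning the acceleration.
    """
    # Estimate 1: slopes at the start of the step.
    v1 = v
    a1 = accel(t, x, v1)
    # Estimates 2 and 3: slopes at the midpoint, each built on the previous one.
    v2 = v + 0.5 * dt * a1
    a2 = accel(t + 0.5 * dt, x + 0.5 * dt * v1, v2)
    v3 = v + 0.5 * dt * a2
    a3 = accel(t + 0.5 * dt, x + 0.5 * dt * v2, v3)
    # Estimate 4: slopes at the end of the step.
    v4 = v + dt * a3
    a4 = accel(t + dt, x + dt * v3, v4)
    # Weighted sum with coefficients 1/6, 2/6, 2/6, 1/6.
    x_next = x + dt * (v1 + 2.0 * v2 + 2.0 * v3 + v4) / 6.0
    v_next = v + dt * (a1 + 2.0 * a2 + 2.0 * a3 + a4) / 6.0
    return x_next, v_next
```

The four calls to `accel` per step are the price of the extra accuracy. Verlet needs only one.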

With physics under our belt, let's move on. Why not animate time itself? This is the variable speed clock and it's dead simple. It's also a great debugging tool: sync all your animations to a global clock and you can activate bullet time at will. You can tell right away if a glitch was an animation bug or a performance hiccup. On this site too: if you hold Shift, everything slows down 5×.

First, we differentiate the clock's time backwards—because in real-time applications, we don't know what the future holds. This is time's velocity $ \class{green}{v_{←i}} $. As we have to divide by the time step too, the velocity is constant and equal to 1. Let's change that.

We can reduce the speed of time at will, by changing $ \class{green}{v_i} $. If we then multiply by the time step $ Δt_i $ again and add the pieces back together incrementally, we get a new clock $ t'_i $. By integrating this way, we only need to worry about slope, not position: time always advances consistently. This is also where variable frame rates pay off: going half the speed is the same job as rendering at twice the frame rate.
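In code, the whole clock can be a few lines. This is a sketch with a made-up class name, where `speed` plays the role of $ v_i $ and `time` is the new clock $ t'_i $.

```python
class VariableSpeedClock:
    """Accumulates warped time from real time steps and a speed factor."""

    def __init__(self):
        self.time = 0.0    # the new clock t'
        self.speed = 1.0   # time's velocity v; 1.0 means real time

    def advance(self, dt):
        """Add one real time step dt, scaled by the current speed."""
        self.time += self.speed * dt
        return self.time
```

Animations then read `clock.time` instead of the system clock. Setting `clock.speed = 0.2` gives a 5× slowdown from that frame onwards, without any jump in `time`.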

Using our other tricks, we can animate $ \class{green}{v_i} $ smoothly, easing in and out of slow motion, or speeding into fast-forward. If we didn't do this, then any animation cued off this clock would jerk at the transition point. This is the chain rule for derivatives in action: derivatives compound when you compose functions. Any jerks caused along the way will be visible in the end result.

If time is smooth, what about interruptions? Suppose we have a cosine eased animation. After half a second, the user interrupts and triggers a new animation. If we abort the animation and start a new one, we create a huge jerk. The object stops instantly and then slowly starts moving again.

One way to solve this is to layer on another animation: one that blends between the two easing curves in the middle. Here it's just another cosine ease, interpolating in the vertical direction, between two changing values. We blend across the entire animation for maximum smoothness. This has a downside though: if the blended animation itself is interrupted, we'd have to layer on another blend, one for each additional interruption. That's too much bookkeeping, particularly when using long animations.

We can fix this by mimicking inertial scrolling. We treat everything that came before as a black box, and assume nothing happens afterwards. We only look at one thing: velocity at the time of interruption.

After determining the velocity of any running animations, we can construct a ramp to match. We start from 0 to create a relative animation.

We can bend this ramp back to zero with another cosine ease, interpolating vertically. This time however, the first easing curve is no longer involved.

If we then add this to the second animation, it perfectly fills the gap at the corner. We only need to track two animations at a time: the currently active one, and a corrective bend. If we get interrupted again, we measure the combined velocity, and construct a new bend that lets us forget everything that came before.
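A sketch of that construction, assuming both the new animation and the bend use a cosine ease of the same duration, with `t` measured from the moment of interruption. All function names are made up.

```python
import math


def cosine_ease(u):
    """Cosine ease from 0 to 1, clamped outside the range 0..1."""
    u = min(max(u, 0.0), 1.0)
    return 0.5 - 0.5 * math.cos(math.pi * u)


def corrective_bend(v0, duration):
    """Ramp with slope v0, starting from 0, bent back to zero by a cosine ease."""
    def bend(t):
        if t >= duration:
            return 0.0
        return v0 * t * (1.0 - cosine_ease(t / duration))
    return bend


def interrupted(start, target, v0, duration):
    """New eased animation plus a bend matching the velocity v0 at t = 0."""
    bend = corrective_bend(v0, duration)

    def position(t):
        return start + (target - start) * cosine_ease(t / duration) + bend(t)
    return position
```

At `t = 0` the sum starts at `start` moving at `v0`, and at `t = duration` it sits on `target` with zero velocity. On the next interruption, we would measure the slope of `position` and build a fresh pair.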

By using a different easing curve for the correction, we can make it tighter, creating a slight wave at the end. Either way, it doesn't matter how the object was moving before: it will always recover correctly.

But what if we get interrupted all the time? We could be tracking a moving pointer, following a changing audio volume, or just have a fidgety user in the chair. We'd like to smooth out this data. The interrupted easing approach would be constantly missing its target, because there is never time for the value to settle. There is an easier way.

We use an exponential decay, just like with inertial scrolling. Only now we manipulate the position $ p_{i} $ directly: we move it a certain constant fraction towards the target $ \class{purple}{o_{i}} $, chasing it. Here, $ \class{royal}{f} = 0.1 = 10\% $. This is a one-line feedback system that will keep trying to reach its target, no matter how or when it changes. When the target is constant, the position follows an exponential arc up or down.
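The one line in question, wrapped in a function for illustration (the name `chase` is made up):

```python
def chase(position, target, f=0.1):
    """Move position a constant fraction f of the way towards target."""
    return position + (target - position) * f
```

Called once per frame as `p = chase(p, o)`, the remaining distance to a constant target shrinks by a factor of $ 1 - f $ each time.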

The entire path is continuous, but not smooth. That's fixable: we can apply exponential decay again. This creates two linked pairs, each chasing the next, from $ \class{slate}{q_{i}} $ to $ \class{blue}{p_{i}} $ to $ \class{purple}{o_{i}} $. Each level appears to do something akin to integration: it smooths out discontinuities, one derivative at a time. Where a curve crosses its parent, it has a local maximum or minimum. These are signs that calculus is hiding somewhere.

That's not so surprising when you know these are difference equations: they describe a relation between a quantity and how it's changing from one step to the next. These are the finite versions of differential equations from calculus. They can describe sophisticated behavior with remarkably few operations. Here I added a third feedback layer. The path gets smoother, but also lags more behind the target.

If we increase $ f $ to 0.25, the curves respond more quickly. Exponential decays are directly tuneable, and great for whiplash-like motions. The more levels, the more inertia, and the longer it takes to turn.

We can also pick a different $ f_i $ for each stage. Remarkably, the order of the $ \class{royal}{f_i} $ values doesn't matter: 0.1, 0.2, 0.3 has the exact same result as 0.3, 0.2, 0.1. That's because these filters are all linear, time-invariant systems, which have some very interesting properties.
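We can check the ordering claim numerically. This sketch assumes every stage starts at 0 and that each stage chases the freshly updated value of the stage before it. The function name is made up.

```python
def cascade(signal, fs):
    """Run a signal through one exponential stage per value in fs."""
    states = [0.0] * len(fs)
    output = []
    for sample in signal:
        target = sample
        for k, f in enumerate(fs):
            # Each stage moves a fraction f towards the stage before it.
            states[k] += (target - states[k]) * f
            target = states[k]
        output.append(states[-1])
    return output
```

Feeding the same input through `cascade(signal, [0.1, 0.2, 0.3])` and `cascade(signal, [0.3, 0.2, 0.1])` gives outputs that agree up to floating point rounding.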

If you shift or scale up/down a particular input signal, you'll get the exact same output back, just shifted and scaled in the same way. Even if you shift by less than a frame. We've created filters which manipulate the frequencies of signals directly. These are 1/2/3-pole low-pass filters that only allow slow changes. That's why this picture looks exactly like sampling continuous curves: the continuous and discrete are connected.

Exponential decays retain all their useful properties in 2D and 3D too. Unlike splines such as Bezier curves, they require no setup or garbage collection: just one variable per coordinate per level, no matter how long it runs. They work equally well for adding a tiny bit of mouse smoothing, or for creating grand, sweeping arcs. You can also use them to smooth existing curves, for example after randomly distorting them.

However there's one area where decay is constantly used where it really shouldn't be: download meters and load gauges. Suppose we start downloading a file. The speed is relatively constant, but noisy. After 1 second, it drops by 50%. This isn't all that uncommon. Many internet connections are traffic shaped, allowing short initial bursts to help with video streaming for example.

Often developers apply slow exponential easing to try and get a stable reading. As you need to smooth quite a lot to get rid of all the noise, you end up with a long decaying tail. This gives a completely wrong impression, making it seem like the speed is still dropping, when it's actually been steady for several seconds. The same shape appears in Unix load meters: it's a lie.

If we apply double exponential easing, we can increase $ f $ to get a shorter tail for the same amount of smoothing. But we can't get rid of it entirely: the more levels of easing we add, the more the curve starts to lag behind the data. We can do much better.

We can analyze the filters by examining their response to a standard input. If we pass in a single step from 0 to 1, we get the step response for the two filters.

Another good test pattern is a single one frame pulse. This is the impulse response for both filters. The impulse responses go on forever, decaying to 0, but never reaching it. This shows these filters effectively compute a weighted average of every single value they've ever seen before: they have a memory, an infinite impulse response (IIR).

Doesn't this look somewhat familiar? It turns out, the step response is the integral of the impulse response. It's a position. Vice versa, the impulse response is the derivative of the step response. It's a velocity. Surprise, physics!

But it gets weirder. Integration sums together all values starting from a certain point, multiplied by the (constant) time step. That means that integration is itself a filter: its impulse response is a single step, the integral of an impulse. Its step response is a ramp, a constant increase.

It works the other way too. Differentiation takes the difference of neighbouring values. It's a filter and its step response is just an impulse, detecting the single change in the step. Its impulse response is an upward impulse followed by a downward one: the derivative of an impulse. When one value is weighted positively and the other negatively, the sum is their difference.

This explains why exponential filters seem to have integration-like qualities: these are all integrators, they just apply different weights to the values they add up. Every step response is another filter's impulse response, and vice versa, connected through integration and differentiation. We can use this to design filters to spec.
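The relationship is easy to verify on the single exponential filter. In this sketch the time step is taken to be one frame, so integration is a running sum and differentiation is a backward difference. The helper names are made up.

```python
from itertools import accumulate


def exponential_filter(signal, f=0.1):
    """Single exponential decay stage, starting from 0."""
    p, output = 0.0, []
    for o in signal:
        p += (o - p) * f
        output.append(p)
    return output


def running_sum(values):
    """Integration with a time step of one frame."""
    return list(accumulate(values))


def backward_difference(values):
    """Differentiation with a time step of one frame, assuming 0 before the start."""
    return [b - a for a, b in zip([0.0] + values[:-1], values)]
```

Summing the filter's impulse response reproduces its step response, and differencing the step response gives the impulse response back.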

That said, filter design is still an art. IIR filters are feedback systems: once a value enters, it never leaves, bouncing around forever. Controlling it precisely is difficult under real world conditions, with finite arithmetic and noisy measurements to deal with. Much simpler is the finite impulse response (FIR), where each value only affects the output for a limited time. Here I use one lobe of a sine wave over 4 seconds.

Even if we don't know how to build the filter, we can still analyze it. We can integrate the impulse response to get the step response. But there's a problem: it overshoots, and not by a little. Ideally the filtered signal should settle at the original height. The problem is that the area under the green curve does not add up to 1.

To fix this, we divide the impulse response by the area it spans, $ \class{green}{\frac{8}{π}} $, or multiply by $ \class{green}{\frac{π}{8}} $, normalizing it to 1. Such filters are said to have unit DC gain, revealing their ancestry in analog electronics. The step response turns out to be a cosine curve, and this filter must therefore act like perpetually interruptible cosine easing.
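A discrete sketch of the same normalization, assuming the lobe is sampled at the middle of each frame and a frame length of 0.1 seconds (both arbitrary choices for illustration). The function name is made up.

```python
import math
from itertools import accumulate


def sine_lobe_filter(duration=4.0, dt=0.1):
    """Return normalized FIR weights for one sine lobe, plus the lobe's area."""
    n = round(duration / dt)
    # Sample one lobe of a sine wave at the middle of each frame.
    lobe = [math.sin(math.pi * (k + 0.5) / n) for k in range(n)]
    total = sum(lobe)
    area = total * dt                      # approaches 8 / pi for 4 seconds
    weights = [value / total for value in lobe]
    return weights, area


weights, area = sine_lobe_filter()
step_response = list(accumulate(weights))  # settles at 1: unit DC gain
```

With these sampling choices the running sum of the weights lands on the cosine ease $ \frac{1}{2} - \frac{1}{2} \cos(π u) $ at every frame, not just approximately.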

There are two ways of interpreting the step response. One is that we pushed a step through the filter. Another is that we pushed the filter through a step, which acts as an integrator. This symmetry is a property of convolution, which is the integration-like operator we've been secretly using all along.

Convolution is easiest to understand in motion. When you convolve two curves $ \class{purple}{q_i} ⊗ \class{green}{r_i} $, you slide them past each other, after mirroring one of them. As our impulse response is symmetrical, we can ignore that last part for now.

We multiply both curves with each other, creating a new curve in the overlap: here a growing section of the impulse response. The area under this curve is the output of the filter at that time. The sum goes to infinity in both directions, allowing for infinite tails. We already saw something similar when we used a geometric series to determine the final resting position of an inertial scroll gesture. With a FIR filter, the sum ends.

But why did we have to mirror one curve? It's simple: from the impulse response's point of view, new values approach from the positive X side, now left, not the negative X side, right. By flipping the impulse response, it faces the other signal, which is what we want.

If we center the view on the impulse response, it's clear we've swapped the role of the two curves. Now it's the step that's passing backwards through the filter, rather than the other way around.

If we replace the step with a random burst of signal, the filter can work its magic, smoothing out the input through convolution. It's a weighted average with a sliding window. The filter still lags behind the data, but the tail is now finite.
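Written out for a real-time signal, the sliding weighted average looks like this. It is a direct, unoptimized sketch that treats everything before the first sample as 0. The function name is made up.

```python
def convolve_causal(signal, weights):
    """FIR filter: output[i] = sum over k of weights[k] * signal[i - k]."""
    output = []
    for i in range(len(signal)):
        total = 0.0
        for k, w in enumerate(weights):
            # The index i - k runs backwards through the signal:
            # this is the mirroring step of convolution.
            if i - k >= 0:
                total += w * signal[i - k]
        output.append(total)
    return output
```

Passing in a single pulse returns the weights themselves, and passing in a step returns their running sum.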

If we make the window narrower, its amplitude increases due to the normalization. We get a more variable curve, but also a shorter tail. This is like a blur filter in Photoshop, only in 1D instead of 2D. As Photoshop has the entire image at its disposal, rather than processing a real-time signal, it doesn't have to worry about lag: it can compensate directly by shifting the result back a constant distance when it's done.

What about custom filter design? Well, if you're an engineer, that's a topic for advanced study, learning to control the level and phase at exact frequencies. If you're an animator, it's much simpler: you pick a desired easing curve, and use its velocity to make a normalized filter. You end up with the exact same step response, turning the easing curve into a perpetually interruptible animation.
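A sketch of the animator's recipe, using smoothstep as an example easing curve (an arbitrary pick). The velocity is taken as the difference between neighbouring samples of the curve, and the function names are made up.

```python
from itertools import accumulate


def smoothstep(u):
    """Example easing curve from 0 to 1."""
    return u * u * (3.0 - 2.0 * u)


def kernel_from_ease(ease, n):
    """FIR weights from an easing curve: its per-frame velocity, normalized."""
    samples = [ease(k / n) for k in range(n + 1)]
    velocity = [b - a for a, b in zip(samples, samples[1:])]
    total = sum(velocity)   # ease(1) - ease(0), which is 1 for a proper ease
    return [v / total for v in velocity]


def step_response(weights):
    """Running sum of the weights: the filter's response to a step."""
    return list(accumulate(weights))
```

Here `step_response(kernel_from_ease(smoothstep, 30))` traces out smoothstep again, one sample per frame.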

Which leads to the last trick in this chapter: removing lag on a real-time filtered signal. There's always an inherent delay in any filter, where signals are shifted by roughly half the window length. We can't get rid of it, only reduce it. We have to change the filter to prefer certain frequencies over others, making it resonate to the kind of signal we expect. We use an easing curve that overshoots, and preferably a short one. This is just one I made up.

The velocity—here scaled down—now has a positive and negative part. As neither part is normalized by itself, the filter will first amplify any signal it encounters. The second part then compensates by pulling the level back down.

The result is that the filter actually tries to predict the signal, which you can imagine is a useful thing to do. At certain points, the lag is close to 0, when the resonance frequency matches and slides into phase. When applied to animation, resonant filters can create jelly-like motions. When applied to electronic music at about 220 Hz, you get Acid House.

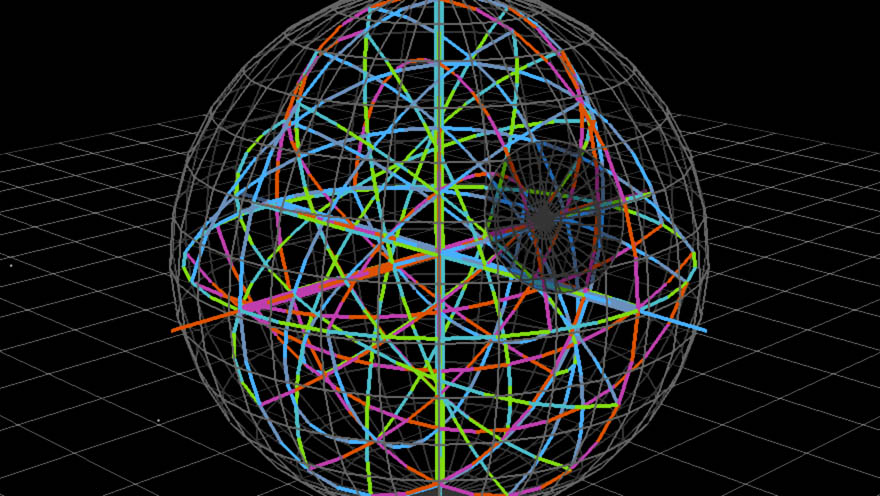

Let's put it all together, just for fun. Here we have some particles being simulated with Verlet integration. Each particle experiences three forces. Radial containment pushes them to within a certain distance of the target. Friction slows them down, opposing the direction of motion. A constantly rotating force, different for each particle, keeps them from bunching up. The target follows the mouse, with double exponential easing.

Friction links acceleration to velocity. Containment links acceleration to position. And integration links them back the other way. These circular dependencies are not a problem for a good physics engine. Note that the particles do not interact; they just happen to follow similar rules.

Tip: Move the mouse and hold Shift to see variable frame rate physics in action.

If we add up the three forces and trace out curves again, we can watch the particles—and their derivatives—speed through time. Just like you are doing right now, in your chair. As velocity and acceleration only update in steps, their curves will only be smooth if the physics clock and rendering clock are synced.