A DOM for Robots

Modelling Live Data

I want to render live 3D graphics based on a declarative data model. That means a choice of shapes and transforms, as well as data sources and formats. I also want to combine them and make live changes. Which sounds kind of DOMmy.

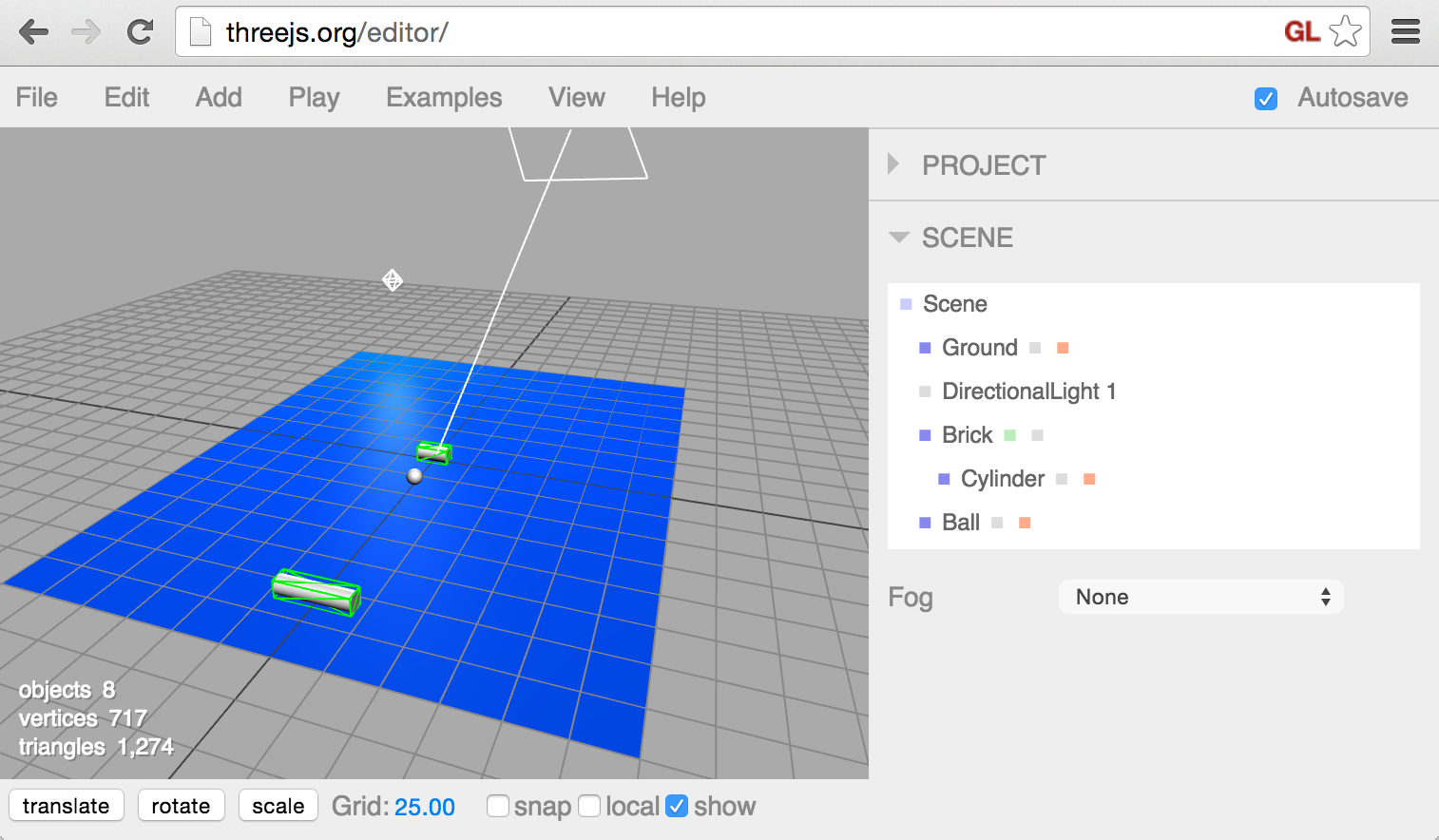

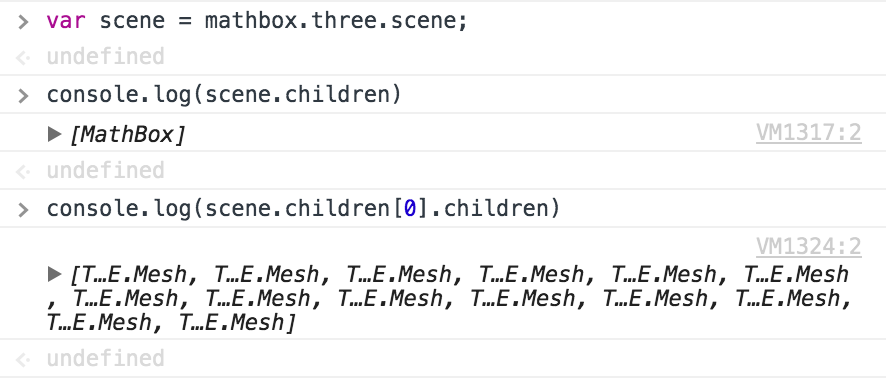

3D engines don't have Document Object Models though. They have scene graphs and render trees: minimal data structures optimized for rendering output. In Three.js, each tree node is a JS object with properties and children like itself. Composition only exists in a limited form, with a parent's matrix and visibility combining with that of its children. There is no fancy data binding: the renderer loops over the visible tree leaves every frame, passing in values directly to GL calls. Any geometry is uploaded once to GPU memory and cached. If you put in new parameters or data, they will be used to produce the next frame automatically, aside from a needsUpdate bit here and there for performance reasons.

So Three.js is a thin retained mode layer on top of an immediate mode API. It makes it trivial to draw the same thing over and over again in various configurations. That won't do: I want to draw dynamic things with the same ease. I need a richer model, which means wrapping another retained mode layer around it. That could mean observables, data binding, tree diffing, immutable data, and all the other fun stuff nobody can agree on.

However, I mostly feed data in, and many parameters will end up as shader properties. These are passed to Three as a dictionary of { type: '…', value: x } objects, each holding a single parameter. Any code that holds a reference to one of these objects will see the same value, so it acts as a register: you can share it, transparently binding one value to N shaders. This way a single .set('color', 'blue') call on the fringes can instantly affect data structures deep inside the WebGLRenderer, without actually cascading through.
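The register idea can be sketched without Three.js at all. In the snippet below, `shaderA`, `shaderB` and the `attributes` store are hypothetical stand-ins, and the type tags are only illustrative. What it shows is the aliasing: both uniform dictionaries hold a reference to the same `{ type, value }` object, so one write is seen everywhere.

```javascript
// Hypothetical stand-ins: only the { type, value } shape matters here.
const color = { type: 'c', value: 'red' }; // one shared register

// Two "shaders" whose uniform dictionaries reference the same object.
const shaderA = { uniforms: { color } };
const shaderB = { uniforms: { color, opacity: { type: 'f', value: 1 } } };

// A minimal attribute store on the fringes that writes into the register.
const attributes = {
  registers: { color },
  set(key, value) {
    // Mutate in place. Replacing the object would break the sharing.
    this.registers[key].value = value;
  },
};

attributes.set('color', 'blue');

console.log(shaderA.uniforms.color.value); // blue
console.log(shaderB.uniforms.color.value); // blue
```

The only rule the sketch relies on is that registers are mutated, never replaced. Nothing has to notify the shaders, because they read the value on the next frame anyway.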

I applied this to build a view tree which retains this property, storing all attributes as shareable registers. The Three.js scene graph is reduced to a single layer of THREE.Mesh objects, flattening the hierarchy. Rather than clumsy CSS3D divs that encode matrices as strings, there are binary arrays, GLSL shaders, and highly optimizable JS lambdas.

As long as you don't go overboard with the numbers, it runs fine even on mobile.

```
<root id="1" scale={600} focus={3}>
  <camera id="2" proxy={true} position={[0, 0, 3]} />
  <shader id="3" code="
uniform float time;
uniform float intensity;

vec4 warpVertex(vec4 xyzw, inout vec4 stpq) {
  xyzw += 0.2 * intensity * (sin(xyzw.yzwx * 1.91 + time + sin(xyzw.wxyz * 1.74 + time)));
  xyzw += 0.1 * intensity * (sin(xyzw.yzwx * 4.03 + time + sin(xyzw.wxyz * 2.74 + time)));
  xyzw += 0.05 * intensity * (sin(xyzw.yzwx * 8.39 + time + sin(xyzw.wxyz * 4.18 + time)));
  xyzw += 0.025 * intensity * (sin(xyzw.yzwx * 15.1 + time + sin(xyzw.wxyz * 9.18 + time)));

  return xyzw;
}"
  time=>{(t) => t / 4} intensity=>{(t) => {
    t = t / 4;
    intensity = .5 + .5 * Math.cos(t / 3);
    intensity = 1.0 - Math.pow(intensity, 4);
    return intensity * 2.5;
  }} />
  <reveal id="4" stagger={[10, 0, 0, 0]} enter=>{(t) => 1.0 - Math.pow(1.0 - Math.min(1, (1 + pingpong(t))*2), 2)} exit=>{(t) => 1.0 - Math.pow(1.0 - Math.min(1, (1 - pingpong(t))*2), 2)}>
    <vertex id="5" pass="view">
      <polar id="6" bend={1/4} range={[[-π, π], [0, 1], [-1, 1]]} scale={[2, 1, 1]}>
        <transform id="7" position={[0, 1/2, 0]}>
          <axis id="8" detail={512} />
          <scale id="9" divide={10} unit={π} base={2} />
          <ticks id="10" width={3} classes=["foo", "bar"] />
          <scale id="11" divide={5} unit={π} base={2} />
          <format id="12" expr={(x) => {
            return x ? (x / π).toPrecision(2) + 'π' : 0
          }} />
          <label id="13" depth={1/2} zIndex={1} />
        </transform>
        <axis id="14" axis={2} detail={128} crossed={true} />
        <transform id="15" position={[π/2, 0, 0]}>
          <axis id="16" axis={2} detail={128} crossed={true} />
        </transform>
        <transform id="17" position={[-π/2, 0, 0]}>
          <axis id="18" axis={2} detail={128} crossed={true} />
        </transform>
        <grid id="19" divideX={40} detailX={512} divideY={20} detailY={128} width={1} opacity={1/2} unitX={π} baseX={2} zBias={-5} />
        <interval id="20" width={512} expr={(emit, x, i, t) => {
          emit(x, .5 + .25 * Math.sin(x + t) + .25 * Math.sin(x * 1.91 + t * 1.81));
        }} channels={2} />
        <line id="21" width={5} />
        <play id="22" pace={10} loop={true} to={3} script=[[{color: "rgb(48, 144, 255)"}], [{color: "rgb(100, 180, 60)"}], [{color: "rgb(240, 20, 40)"}], [{color: "rgb(48, 144, 255)"}]] />
      </polar>
    </vertex>
  </reveal>
</root>
```

Note: The JSX is a lie, you define nodes in pure JS.

Keep it Simple

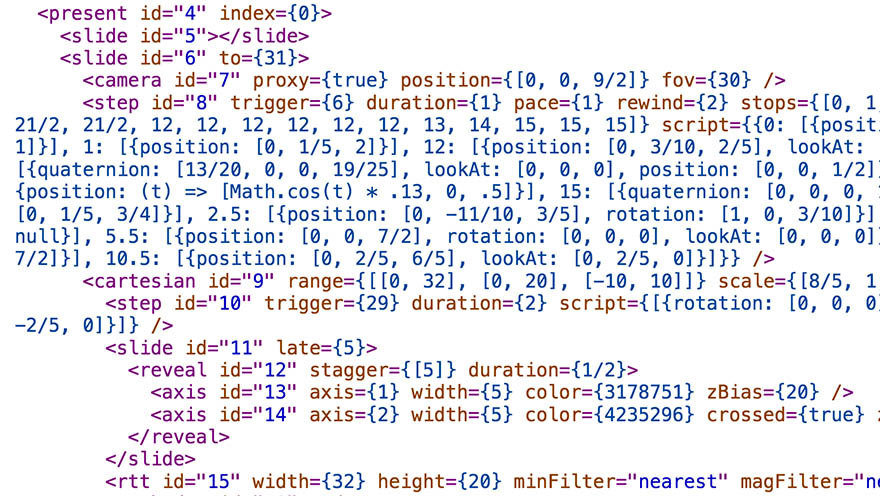

From afar there's a tree of nodes, similar to SVG tags. This is the MathBox library of vector primitives. The basic shapes are all there: points, lines, faces, vectors, surfaces, etc. These nodes are placed inside a shallow hierarchy of views and transforms.

However none of the shapes draw anything by themselves. They only know how to draw data supplied by a linked source. Data can be an array (static or live), a procedural source, custom JS / GLSL code, etc. This is further augmented by data operators which can be sandwiched between source and shape, forming automatic pipelines between siblings.

The current set of components looks like this:

Base

- Group

- Inherit

- Root

- Unit

Camera

- Camera

Draw

- Axis

- Face

- Grid

- Line

- Point

- Strip

- Surface

- Ticks

- Vector

Data

- Area

- Array

- Interval

- Matrix

- Scale

- Volume

- Voxel

Operator

- Grow

- Join

- Lerp

- Memo

- Resample

- Repeat

- Slice

- Split

- Spread

- Swizzle

- Transpose

Overlay

- DOM

- HTML

Present

- Move

- Play

- Present

- Reveal

- Slide

- Step

RTT

- Compose

- RTT

Shader

- Shader

Text

- Format

- Label

- Text

- Retext

Time

- Clock

- Now

Transform

- Fragment

- Layer

- Transform

- Transform4

- Vertex

View

- Cartesian

- Cartesian4

- Polar

- Spherical

- Stereographic

- Stereographic4

- View

To make you feel at home, nodes have an id and classes, and you can use CSS selectors to identify them. Nodes link up with preceding siblings and parents by default, but you can select any node in the tree. This allows for arbitrary graphs, including feedback loops. However all of this is optional: you can also pass in direct node objects or MathBox's own jQuery-like selections. What it doesn't have is a notion of detached document fragments: nodes are immediately inserted on creation.
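As a rough illustration of the default linking, here is a minimal sketch of how a shape might locate its source by walking back through preceding siblings and then up through its parents. The `node` and `findSource` helpers and the trait names are hypothetical. MathBox's actual resolution rules are not spelled out here, so treat this as one plausible reading.

```javascript
// Hypothetical mini tree: each node has a type, a trait list and a parent.
function node(type, traits, children = []) {
  const n = { type, traits, children, parent: null };
  children.forEach((child) => { child.parent = n; });
  return n;
}

// Check preceding siblings (nearest first), then the parent itself,
// then repeat one level up. Returns null if nothing matches.
function findSource(from, trait) {
  let current = from;
  while (current.parent) {
    const siblings = current.parent.children;
    for (let i = siblings.indexOf(current) - 1; i >= 0; i--) {
      if (siblings[i].traits.includes(trait)) return siblings[i];
    }
    current = current.parent;
    if (current.traits.includes(trait)) return current;
  }
  return null;
}

const interval = node('interval', ['source']);
const line = node('line', ['shape']);
const polar = node('polar', ['view'], [node('axis', ['shape']), interval, line]);
const root = node('root', [], [polar]);

console.log(findSource(line, 'source').type);  // interval
console.log(findSource(line, 'view').type);    // polar
console.log(findSource(interval, 'source'));   // null
```

An explicit selector or node reference would simply bypass this search, which is what makes arbitrary graphs and feedback loops possible.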

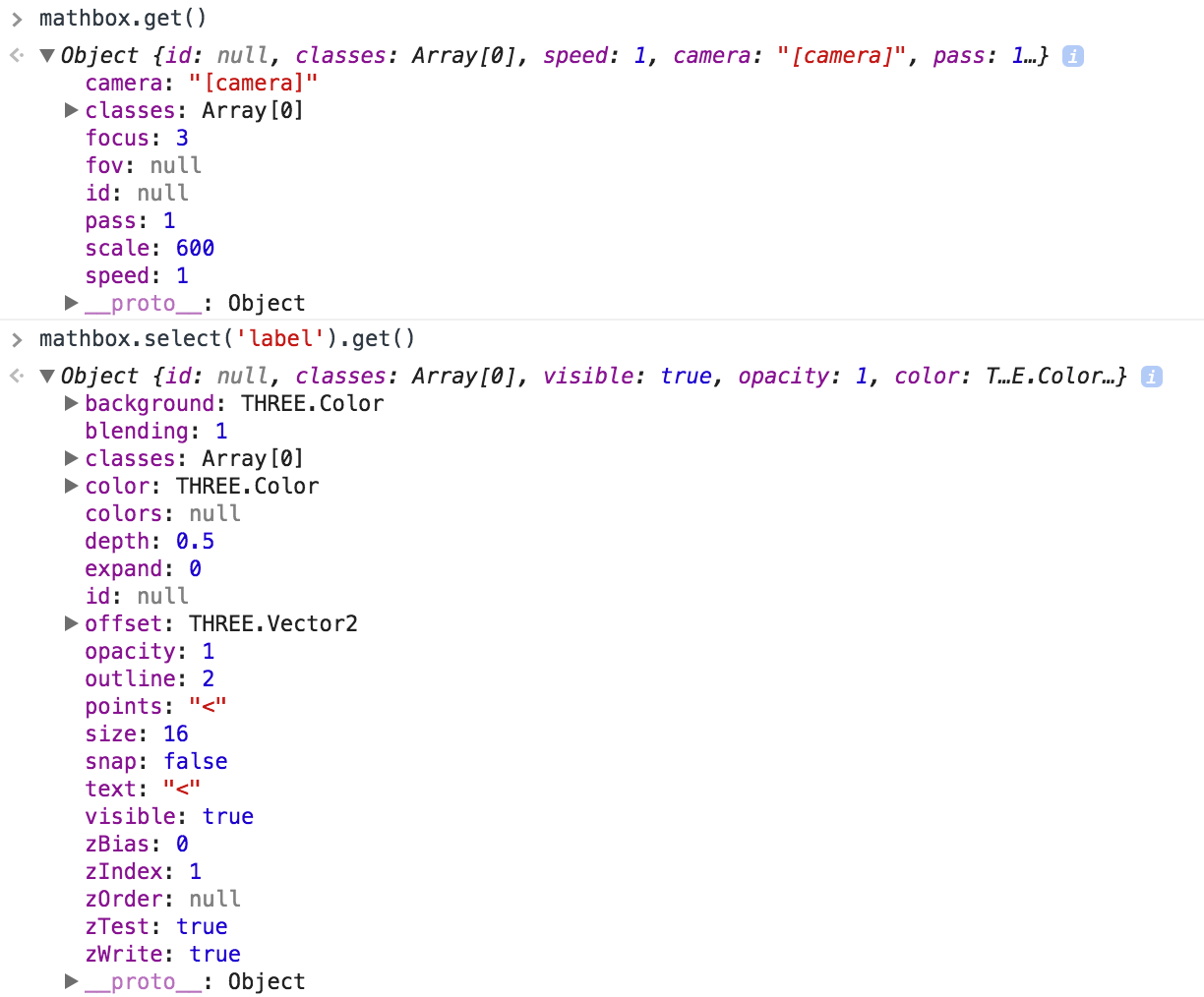

A node's attributes can be .get() and .set(), though there is also a read-only .props dictionary for fashionable reasons. The values are strongly typed as Three.js colors, vectors, matrices, … but accept e.g. CSS colors and ordinary arrays too. The values are normalized for immediate use, while the original values are preserved on the side for printing and serialization.
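A minimal sketch of that split between normalized and original values, assuming a hypothetical `types` table and `makeNode` factory (neither is MathBox's real implementation, and a typed array stands in for Three.js vectors):

```javascript
// Hypothetical type table: each type knows how to normalize loose input.
const types = {
  number: (v) => Number(v),
  bool: (v) => Boolean(v),
  // Pad short arrays with zeros and store as a typed array.
  vec3: (v) => Float32Array.from([v[0] || 0, v[1] || 0, v[2] || 0]),
};

function makeNode(schema) {
  const normalized = {}; // ready for immediate use
  const originals = {};  // kept on the side for printing and serialization
  return {
    set(key, value) {
      if (!(key in schema)) throw new Error(`Unknown attribute: ${key}`);
      originals[key] = value;
      normalized[key] = types[schema[key]](value);
      return this;
    },
    get(key) { return normalized[key]; },
    // Read-only snapshot of the normalized values.
    get props() { return Object.freeze({ ...normalized }); },
    serialize() { return JSON.stringify(originals); },
  };
}

const axis = makeNode({ width: 'number', position: 'vec3' });
axis.set('width', '3').set('position', [0, 0.5]);

console.log(axis.get('width'));           // 3
console.log(axis.get('position').length); // 3
console.log(axis.serialize());            // {"width":"3","position":[0,0.5]}
```

Serializing from the originals means a round trip prints what the user typed, not the padded or converted form.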

What's unique is the emphasis on time. First, properties can be directly bound to time-dependent expressions, on creation or afterwards. Second, clocks are primitives on their own. This allows for nested timelines, on-demand bullet time, fast forwards and more. It even supports limited time travel, evaluating an expression several frames in the past. This can be used to ensure consistent 60 fps data logging through janky updates, useful for all sorts of things. It's exposed publicly as .bind(key, expr) and .evaluate(key, time) per node. It's also dogfood for declarative animation tracks. The primitives clock/now provide timing, while step and play handle keyframes on tracks.
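To make the time model concrete, here is a small sketch of bound expressions evaluated against nested clocks. Only the names `.bind(key, expr)` and `.evaluate(key, time)` come from the text above. The `makeClock` helper, its `speed` and `delay` options, and the internals of `makeNode` are assumptions made for illustration.

```javascript
// Hypothetical clock: maps its parent's time to a local time.
function makeClock(parent, { speed = 1, delay = 0 } = {}) {
  return {
    at(time) {
      const t = parent ? parent.at(time) : time;
      return (t - delay) * speed;
    },
  };
}

// Hypothetical node: an attribute is either a plain value or a bound expression.
function makeNode(clock) {
  const values = {};
  const bindings = {};
  return {
    set(key, value) { delete bindings[key]; values[key] = value; return this; },
    bind(key, expr) { bindings[key] = expr; return this; },
    // Evaluate at any time, including past frames: expressions are pure in t.
    evaluate(key, time) {
      const expr = bindings[key];
      return expr ? expr(clock.at(time)) : values[key];
    },
  };
}

const rootClock = makeClock(null);
const slowMo = makeClock(rootClock, { speed: 0.25 }); // bullet time
const shader = makeNode(slowMo).bind('time', (t) => t / 4);

console.log(shader.evaluate('time', 16)); // 1
console.log(shader.evaluate('time', 8));  // 0.5
```

Because the expression only depends on the time it is handed, asking for a value several frames in the past is just another call with a smaller `time`.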

This is definitely a DOM, but it has only basic features in common with the HTML DOM and does much less. Most of the magic comes from the components themselves. There's no cascade of styles to inherit. Children compose with a parent, they do not inherit from it, only caring about their own attributes. The namespace is clean, with no weird combo styles à la CSS. As much as possible, attributes are unique orthogonal knobs you can turn freely.

Model-View-Projection

On the inside I separate the generic data model from the type-specific View Controller attached to it. The controller's job is to create and manage Three.js objects to display the node (if any). Because a data source and a visible shape have very little in common, the nodes and their controllers are blank slates built and organized around named traits. Each trait is a data mix-in, with associated attributes and helpers for common behavior. Primitives with the same traits can be expected to work the same, as their public facing models are identical.
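One way to picture traits as data mix-ins is the sketch below. The trait names, their default attributes and the `definePrimitive` helper are all hypothetical. The point is only that two primitives built from the same traits end up with the same public-facing attributes.

```javascript
// Hypothetical trait definitions: a name plus default attributes.
const traits = {
  node: { id: null },
  style: { color: 'gray', opacity: 1 },
  line: { width: 1 },
  source: { channels: 1 },
};

// A primitive type is little more than a list of traits.
function definePrimitive(type, traitNames) {
  return function create(attributes = {}) {
    const defaults = Object.assign({}, ...traitNames.map((name) => traits[name]));
    return {
      type,
      traits: traitNames,
      attributes: { ...defaults, ...attributes },
      hasTrait(name) { return traitNames.includes(name); },
    };
  };
}

const line = definePrimitive('line', ['node', 'style', 'line']);
const axis = definePrimitive('axis', ['node', 'style', 'line']);
const interval = definePrimitive('interval', ['node', 'source']);

const l = line({ width: 5 });
console.log(l.attributes.width, l.attributes.color); // 5 gray
console.log(Object.keys(axis().attributes).join(',')); // id,color,opacity,width
console.log(interval().hasTrait('style')); // false
```

A controller looking for something to draw can then match on `hasTrait('source')` rather than on a concrete type.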

Controllers can traverse the graph to find each other by matching traits, listening for events and making calls in response. This way only specific events will cascade through cause and effect, often skipping large parts of the hierarchy. The only way to do a "global style recalculation" would be to send a forced change event to every single controller, and there's never a reason to do so.

The controller lifecycle is deliberately kept simple: make(), made(), change(…), unmake(), unmade(). When a model changes, its controller either updates in place, or rebuilds itself, doing an unmake/make cycle. The change handler is invoked on creation as well, to encourage stateless updates. It affords live editing of anything, without having to micro-optimize every possible change scenario. Controllers can also watch bound selectors, retargeting if their matched set changes. This lets primitives link up with elements that have yet to be inserted.
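The lifecycle can be sketched as a small class that logs each hook. The hook names are the ones listed above. Everything else, including the `rebuildKeys` option, the `build` and `update` helpers, and the exact order in which the hooks fire, is an assumption for the sake of the example.

```javascript
class Controller {
  constructor(model, rebuildKeys = []) {
    this.model = model;
    this.rebuildKeys = rebuildKeys; // attributes that cannot be updated in place
    this.log = [];
  }

  // Lifecycle hooks. A real controller would create or destroy renderables here.
  make() { this.log.push('make'); }
  made() { this.log.push('made'); }
  change(changed) { this.log.push('change:' + Object.keys(changed).join(',')); }
  unmake() { this.log.push('unmake'); }
  unmade() { this.log.push('unmade'); }

  // The change handler also runs on creation, with every attribute marked changed.
  build() {
    this.make();
    this.made();
    this.change(this.model);
  }

  update(changed) {
    Object.assign(this.model, changed);
    const needsRebuild = Object.keys(changed).some((key) => this.rebuildKeys.includes(key));
    if (needsRebuild) {
      this.unmake();
      this.unmade();
      this.build();
    } else {
      this.change(changed);
    }
  }
}

const axis = new Controller({ detail: 512, color: 'gray' }, ['detail']);
axis.build();
axis.update({ color: 'blue' }); // cheap, in place
axis.update({ detail: 128 });   // structural, full rebuild

console.log(axis.log.join(' '));
// make made change:detail,color change:color unmake unmade make made change:detail,color
```

Since `change` is the only place state is applied, the rebuild path and the in-place path cannot drift apart.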

Unlike HTML, the DOM is not forced to contain a render tree as well. Only some of the leaf nodes have styles and create renderables. Siblings and parents are called upon to help, but the effects don't have to be strictly hierarchical. For example, a visual effect can wrap a single leaf but still be applied after all its parents, as transformations are collected and composed in passes.

It'll Do

The result is not so much a document model as it is a computational model inside a presentational model. You can feed it finalized data and draw it directly… or you can build new models within it and reproject them live. Memoization enables feedback and meta-visualization. The line between data viz and demo scene is rarely this blurry.

Here, the notion of a computed style has little meaning. Any value will end up being transformed and processed in arbitrary ways down the pipe. As I've tried to explain before, the kinds of things people do with getComputedStyle() and getBoundingClientRect() are better achieved by having an extensible layout model, one that affords custom constraints and composition on an equal footing. To do otherwise is to admit defeat and embrace a leaky abstraction by design.

The shallow hierarchy with composition between siblings is particularly appealing to me, even if I realize it introduces non-traditional semantics more reminiscent of a command line. It acts as both a jQuery-style chainable API and a minimal document model. If it offends your sensibilities, you could always defuse the magic by explicitly wiring up every relationship. In case of confusion, .inspect() will log syntax-highlighted JSX, while .debug() will draw the underlying shader graphs.

I've defined a good set of basic primitives and iterated on them a few times. But how to implement it, when WebGL doesn't even fully cover OpenGL ES 2?

- MathBox² - PowerPoint Must Die

- A DOM for Robots - Modelling Live Data

- Yak Shading - Data Driven Geometry

- ShaderGraph 2 - Functional GLSL