On Headaches and Aspirin

"A computer is an educational device. It is in fact a direct reflection of your own imagination, your own intelligence. Once you're given the freedom in which to create things and see the immediate response on the screen, then it becomes a very enjoyable experience. You go on to involve yourself in many other things."

– Unknown Computer Store Manager, 1979

Sometimes, I look back at my many years of coding, and think: "Wow, it's so much easier now." Then I think about it some more.

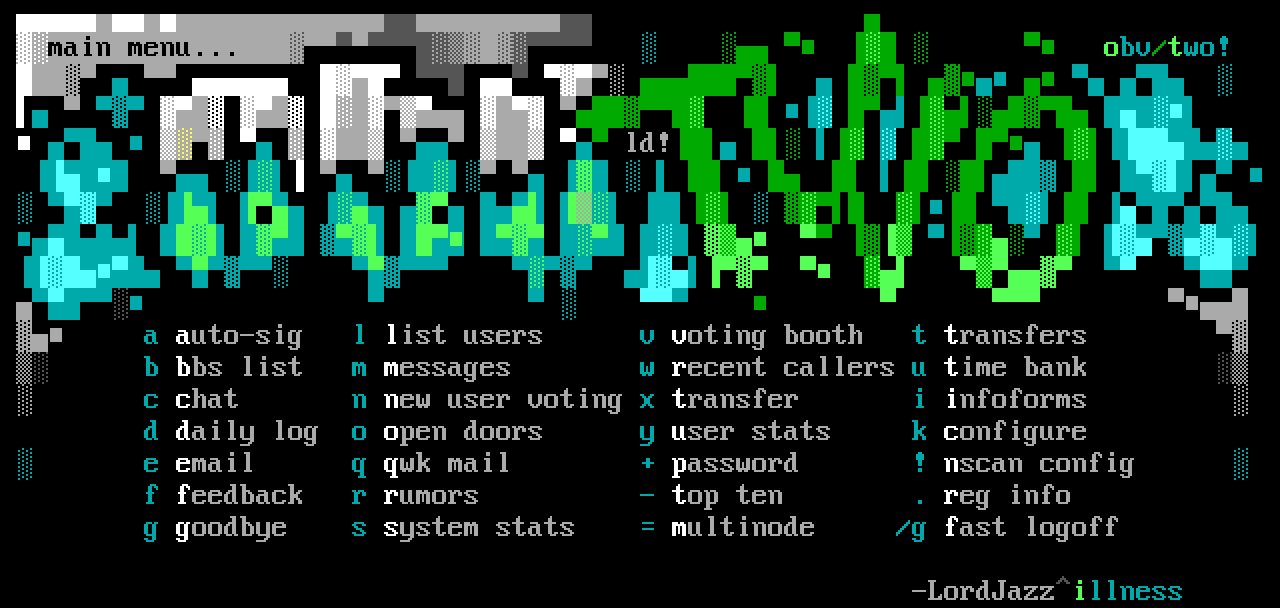

I got my start about 30 years ago. Back then, learning to code meant laboriously typing a few dozen lines of code from a book into a BASIC interpreter, hoping you hadn't made any typos. It was entirely up to you to demystify the bulk of these arcane runes. Access to good programming books was a luxury, certainly if you were far away from a university's comp sci department. A good day was when you found a random .txt file on a BBS or illicit CD-ROM, which taught you how to raytrace in between all the pirated games and rare pixelated erotica. Some people collected the titties, but I hoarded the .txts instead, like little ASCII treasures of revealed divine knowledge.

In fact, if you want a one-sentence horror story for greybeard programmers, here it is: I had to learn C from a Win32 API book, because it was all I had.

Meaning, for years I just assumed that all "serious" UI-related code was a giant dumpster fire. That it's perfectly normal to have to manage an arcane bureaucracy of structs just to do what every other normal application does every day. It's fine.

By comparison, today you can click just one link and have a fully functional live coding environment at your fingertips. You can find instant StackOverflow answers for ridiculously specific questions. You can even go look at the development process of some of the most successful projects out there, all in the open.

What's more, we have entered an age where programming is slowly but surely getting over its teenage infatuations. Functional programming has won. Lambdas and pure data are now topics you need to have mastered before you can call yourself properly senior. Though it'll take a while before that revolution is equally televised everywhere.

You'd think this would make things so much easier, but in many ways it doesn't. To understand why, let's talk about pedagogy and games.

The Way It's Always Been

Games are often seen as mere entertainment and distraction. I think this is incredibly short-sighted. For one, play is not a mindless activity. It is a universal pastime enjoyed by humans everywhere. Many of our cousins in the animal kingdom engage in play as well, like our dogs and cats. It's mainly the children and young who do it, often with help and supervision of adults. We role-play an activity in a simplified form, as practice for the real thing later. This suggests an innate connection between playing and learning.

The notion of fun is also essential. When something is fun, we experience it as inherently rewarding for its own sake. This is why adults continue to play games, even if they know them well. Games are made up of activities that we are naturally drawn to and enjoy doing.

Hide-and-seek is a game about exploring one's environment, about being stealthy, but also about finding what's hidden. It mimics the harsh predator-prey dynamic of nature: a game of cat and mouse. A person who's hiding must think about how someone else will perceive a situation. The person who is seeking must think about the difference between what they can see and what can be. What's more, players usually take turns, allowing them to experience and compare both sides. If you are better at hiding, you are also better at seeking, because you know what kinds of places make for attractive hiding spots. It also makes you better at finding Easter Eggs.

So play is a way of creating fun, and fun is what you have when you play. It's not a circular definition though; it just implies that nature has predisposed us to find certain things fun, if they might develop into useful skills later. Play evolved to help us hunt, gather, survive and thrive.

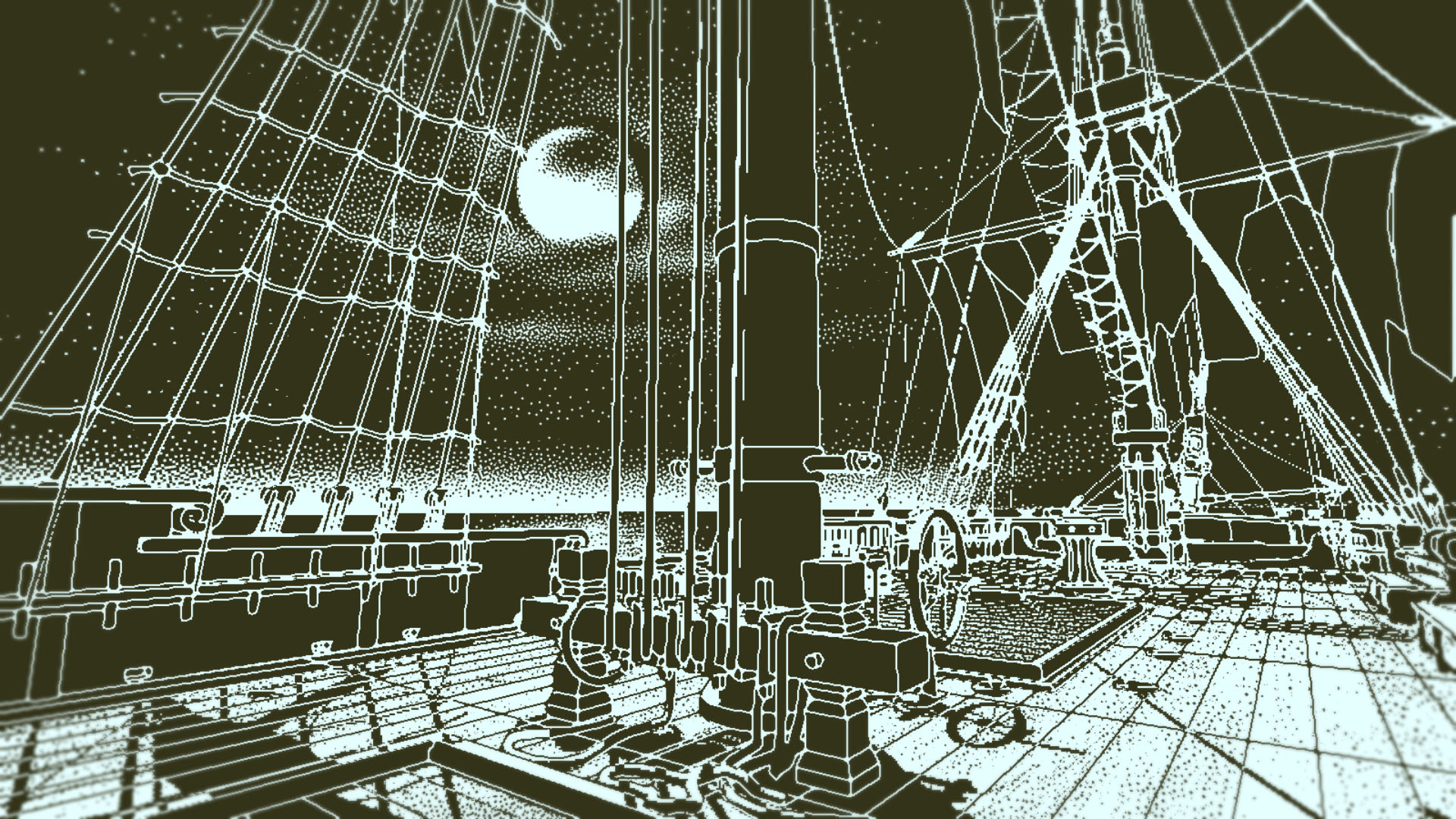

Return of the Obra Dinn, aka the best game of Clue ever

There is also a meta-level of fun: we don't just find it fun to investigate clues, to role-play characters, or to collect specific treasures; we also find it fun in general to practice, to compete, to mingle and to be rewarded, regardless of the specific activity. One model of this comes from Richard Bartle, who investigated early online MUDs (i.e. text-based mumorpugers from the late 80s). He identified 4 archetypes of players: Explorers, Killers, Socializers and Achievers, which he summarized using the four suits of a deck of cards:

Achievers are Diamonds: they're always seeking treasure.

Explorers are Spades: they dig around for information.

Socialisers are Hearts: they empathise with other players.

Killers are Clubs: they hit people with them.

In MUDs, it became clear that each player gravitated towards a preferred play style. The specific activities in the game defined the audience that would find the game most enjoyable, and hence stick with it. Tune it too much for one player's style, and you'd turn away everyone else.

We don't just have an innate sense of fun, but we also have individual tastes in fun.

It's Not Real, Mom

When we talk about games, we tend to talk about the fantasy they're imitating rather than the literal actions at hand. Kids playing cowboys-and-indians just point their hands at each other and say "bang," but they'll vividly tell the tale of how they killed each other.

Arguably this disconnect between fantasy and reality is nowhere larger than in video games, where the same handful of buttons on a controller are used to build, fight, roam, trade, craft, and even to love. However, the complexity of a game world is such that the player's agency extends into the game in a very direct way. It provides real feedback based on a crude approximation of the same rules that govern reality. As such, playing computer games is not inherently a waste of time, a fact that took parents a long while to get used to.

Today we take it for granted, but this instant feedback loop between a person and a simulated system was groundbreaking when it first appeared. Computer enthusiasts were often entranced, and called "computer freaks," even by themselves. Computers provided an irresistible super-stimulus to those predisposed to it.

(Originally sampled from this documentary)

The super-stimulus aspect is also revealed in the evolution of video games. Early games were limited in scope, often based directly on board games. As our ability to simulate grew, however, we saw the birth of adventures, platformers, racers, flight sims, shooters, role-playing games, and so on. These are games that would be impractical to simulate with pen and paper, or which would risk life-threatening injury if played IRL.

Over the subsequent decades, these raw alchemical ingredients would be refined and blended together. Looking Glass' Thief turned the formulaic first-person-shooter on its head, with an environment entirely designed around avoiding engagement and staying in the shadows. Its cousin Deus Ex instead blended the FPS with an RPG-like character system, allowing you to evolve your abilities to match your preferred play style. Each level became a puzzle box to be unlocked in a myriad of orthogonal ways, stealthy or otherwise, rather than just a set of doors requiring a specific set of keys.

Multiplayer enabled even more convergence. World of Warcraft took the fantasy RPG and made it into a cooperative team sport. Minecraft was the birth of an uber-genre, which combined resource gathering and crafting (e.g. Civilization), with exploration and combat (e.g. Doom). Online play provided a social layer, turning these disparate mechanics into a fully integrated economy. A survival game is thus a vertical slice of life itself. Its core game loop mirrors the human routine: you work, so you can eat, so you can enjoy hobbies, in good company. Which some people really played to win.

The success of these formulas is easy to see. WoW addiction became a real thing, sometimes jeopardizing whole semesters at a time. Minecraft made Notch a billionaire.

There is also another gaming genre that has unquestionably swept the world: social media. Its currency, however, is not Gold or Diamonds, but rather Status. It too provides super-stimulus mechanics: resource gathering of likes and followers, crafting poasts and memes, exploring your network, and fighting with the other tribes.

The success of the social formula has caused it to bleed into every other aspect of our lives. Teenagers hang out and socialize online, glued to their phones, consuming stories and streams by the truckload. Workplace communication has shifted away from email into gamified Slacks and other live walled gardens. Global politics has been temporarily redefined to center on the Orange Man, by the cabal of Twitter addicts who hate-follow him and amplify his every move.

We Are Explorers

What does any of this have to do with learning to code? I'd like you to consider programming itself as a video game, and analyze it in this light.

A crucial aspect of game design is the concept of Problem-Solution ordering. Put more simply: you can't teach people what aspirin is for until they've had a headache. If a player trips over and picks up a key on the way to a locked door, it's likely they'll unlock the door without realizing that's what actually happened: they just pressed X by the door, and it opened.

Despite having "passed the test," they may not understand what keys are for, or what to do when they encounter a door that won't immediately open like magic. This is a real problem, and this is why good games are designed so that you always find the locked door first and have to turn around. It naturally applies to metaphorical keys and doors too, to any learnable mechanic really.

The programming version of doing this wrong is called a Monad Tutorial. It doesn't matter if it's a burrito or a "monoid in the category of endofunctors," if you've never felt the pain of writing code without it. Start there instead.
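Here is a rough sketch of what "start with the headache" could look like in Python. Everything in it is made up for illustration: the `CONFIG` data, the function names, and the tiny `bind` helper, which is only one Maybe-style instance of the pattern and not a general definition of a monad. The first function is the locked door: every lookup can fail, so every lookup needs its own check. The helper only makes sense once you've written that a few times.

```python
from typing import Callable, Optional, TypeVar

A = TypeVar("A")
B = TypeVar("B")

# Hypothetical nested data where any level may be missing.
CONFIG = {"user": {"profile": {"email": "ada@example.com"}}}


def email_with_headache(config: dict) -> Optional[str]:
    """The headache: each step can fail, so each step gets its own check."""
    user = config.get("user")
    if user is None:
        return None
    profile = user.get("profile")
    if profile is None:
        return None
    return profile.get("email")


def bind(value: Optional[A], step: Callable[[A], Optional[B]]) -> Optional[B]:
    """The aspirin: run the next step only if the previous one produced a value."""
    return None if value is None else step(value)


def email_with_aspirin(config: dict) -> Optional[str]:
    """Same lookup, with the repeated None checks folded into bind."""
    user = bind(config, lambda c: c.get("user"))
    profile = bind(user, lambda u: u.get("profile"))
    return bind(profile, lambda p: p.get("email"))
```

Both functions return the same thing for the same input. The second one just stops making you write the same `if` over and over, which is the point a tutorial should arrive at last rather than open with.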

The code that we greybeards cut our teeth on was a highly specific niche. It required pioneers not afraid to roll up their sleeves and go at it on their own. It took a lot of curiosity and effort to reverse engineer it all and get good. It was a lonesome pursuit, practiced by a subculture that only knew itself by faceless handles passing in the night. It was also eminently competitive, because the only way to stand out among the nicknames was to be good.

Today's programming environment is entirely different. It discourages originality by riding on the coattails of package repositories we trust far too much. It discourages curiosity by wrapping everything in opaque layers nobody wants to trace through, but everyone must use. It is a highly social pursuit, where quality is less important than reach and image. But it's not competitive at all, with large parts of our stacks now so tumorous that only megacorps can even try to maintain them, before eventually giving up.

These are two different games, appealing to entirely different players. The kind of person who would've thrived in the hacker scene of old looks at the new world and simply goes: "this is not for me." A world where all the answers have already been provided is one where exploration is only for the elders. A world where everything is shielded off is one where you can't make the bad mistakes you need to learn from. A world where clout is more important than correctness is one where sociopaths make the rules. A world without merit-based competition is one where every effort is equally valid and hence equally worthless.

To be blunt, the modern programming world we've created for ourselves is optimized for the sheepish and incurious. In terms of gaming archetypes it appeals to Status-Achieving Socializers who don't mind Killing. It also means we have a giant pile of code out there that our replacements seem entirely ill-suited for maintaining, let alone evolving.

However, I'm not trying to throw out the baby with the bathwater: I won't deny the value in platforms like GitHub or NPM. I want to point out instead that they are entirely the wrong on-ramp for teaching people to create new kinds of GitHubs, NPMs, or other entirely new things. The games we have adopted for that purpose appeal to entirely the wrong kind of player.

We have an enormous amount of CRUD to clean up, and very few willing souls eager to learn how to do it. The organizations that should be leading those efforts are busy doing other things. Like cranking out copy/pasters in coding schools. Or putting the people who came up with 52 genders in charge of naming things, the absolute nutters.

We won't make software better by changing terminology arbitrarily. It's also not a more welcoming environment, if it turns off the freaks and geeks. That's only going to shift the focus away even more from the practices that built the internet and made it purr like a kitten. Nor will this be helped by getting teenagers to watch more streams of other people doing things. Someone needs to teach the kids to actually roll up their own sleeves for a change.

When faced with an intolerable situation, you have three choices: Exit, Voice or Loyalty. But when you give a hacker three options, they look for a fourth one. Loyalty is not my style, so I'm choosing instead to oscillate strategically between the first two. This is properly called being a Lighthouse.

Lighthouses help explorers come back home.