On Progress

The known unknown knowns we lost

When people think of George Orwell's 1984, what usually comes to mind is the orwellianism: a society in the grip of a dictatorial, oppressive regime that rewrote history daily, as if it were a casual matter.

Not me, though. For whatever reason, what has stuck with me since reading it as a teenager is something different and more specific: as time went on, the quality of all the goods, services and tools that people relied on got unquestionably worse. In the story, this happened slowly enough that many people didn't notice. Even those who did could do little about it, because the degradation happened across the board, and the population had no choice but to settle for the only options available.

I think about this a lot, because these days I see it everywhere around me. What's more, if you talk to seniors and listen to what they say, you will realize they see even more of it, and it's not just nostalgia. Do you know what you don't know?

Chickens roost and sleep in trees

A Chicken in Every Pot

Since before I was born, my parents have grown their own vegetables. We also had chickens, which provided us with more eggs than we usually knew what to do with. The first dish I ever cooked was an omelette, and in our family, Friday was Egg Day, when everyone would fry their own, any way they liked.

As a result, I remain very picky about the eggs I buy. A fresh egg from a truly free-range chicken has an unmistakable quality: the yolk is rich and deep orange. Nothing like factory-farmed cage eggs, whose yolks are bright yellow, flavorless and, quite frankly, unappetizing. Another thing that stands out is how long our eggs would keep in the fridge. Freshness aside, this is because an egg comes out of the chicken with a natural protective coating. Washing eggs aggressively destroys this coating, which increases spoilage.

The same goes for the chickens themselves. I learned at an early age what it looks like to chop a chicken's head off with a machete. I also learned that chicken is supposed to be a flavorful meat with a distinct taste. The idea that other things would "taste like chicken" seems preposterous from this point of view. Rather, it's that most of the chicken we eat simply does not taste like chicken anymore. Industrial chickens are raised in entirely artificial circumstances, unhealthy and constrained, and this has a noticeable effect on the development and taste of the animal.

Here's another thing. These days when I fry a piece of store-bought meat, even when it's not frozen, the pan usually fills up with a layer of water after a minute. I have to pour it out, so I can properly brown it at high temperature and avoid steaming it. That's because a lot of meat is now bulked up with water, so it weighs more at the point of sale. This is not normal. If the only exposure you have to meat is the kind that comes in a styrofoam tray wrapped in plastic, you are missing out, and not even realizing it.

For vegetables and fruit, there is a similar degradation. Take tomatoes, which naturally bruise easily. In order to make them more suitable for transport, industrial tomatoes have mainly been selected for toughness. This again correlates with higher water content. But as a side effect, most tomatoes simply don't taste like proper tomatoes anymore. The flavor that most people now associate with, say, sun-dried or heirloom tomatoes is simply what tomatoes used to taste like. Rather than buying them fresh, you are often better off buying canned Italian Roma tomatoes, which didn't suffer quite the same fate. Italians know their tomatoes, even though the plant is native to neither Italy nor Europe.

For berries, it's the same story. Our yard had several bushes, with blueberries and red berries, and my mom would make jam out of them every year. But on a good day we would just eat them straight from the bush. I can tell you, the ones I buy in the store simply don't taste as good.

There is another angle to this too: preparation. Driven by the desire to serve more customers more quickly, industrial cooks prefer dishes that are easy to assemble and quick to make. But many traditional dishes involve letting stews and sauces simmer for hours at a time in a single pot, developing deep flavors over time. This is simply not compatible with rapid, mass production. It implies that you need to prepare it all ahead of time, in sufficient quantities. When was the last time you ordered something at a chain, and were told they had run out for the day?

Hence, these days, growing your own food, raising your own animals, and cooking your own meals is not just a choice about self-sufficiency. It's a choice to favor artisanal methods over mass-scale production, which strongly affects the result. It's a choice to favor varieties for taste rather than for what packages, transports and sells easily. To favor methods that are more labor-intensive, but which build upon decades, even centuries of experience.

It also echoes a time when the availability of particular foods was incredibly seasonal, and building up preserves for winter was a necessity. People often had to learn to make do with basic, unglamorous ingredients, and they succeeded anyway. Add to this the fact that many countries suffered severe shortages during World War II, which is traceable in the local cuisine, and you end up with a huge amount of accumulated knowledge about food that we're slowly but surely losing.

Life in Plastic

It's difficult now to imagine a world without plastic. The first fully synthetic plastic, Bakelite, was developed in 1907. Since then, chemistry has delivered countless synthetic materials. But it would take over half a century for plastic to become truly commonplace. With our oceans now full of floating microplastics that affect the food chain, this seems to have been a dubious choice.

When I look at pictures of households from the 1950s, one thing that stands out to me is the materials. There is far more wood, metal, glass and fabric than there is plastic. These are all heavier materials, but also tougher. When they did use plastic, the designs often look far bulkier than a modern equivalent. Also absent are faux materials: there's no plastic painted glossy to look like metal, no particle board made to look like real wood, no acrylic substituting for real glass.

The problem is simple: when exposed to the UV rays in sunlight, plastic degrades and discolors. When exposed to strain and tension, tough plastic cracks instead of flexing. Hence, when you replace a metal or wooden frame with a plastic one, a product's lifespan suffers. When it breaks, you can't simply make a replacement in an ordinary workshop either. Without a 3D printer and highly detailed measurements, you're usually out of luck, because you need one highly specific molded part, which is typically held in place not by screws, but by glue or tension. That tension all but guarantees the part will fail sooner rather than later.

In fact, I have this exact problem with my freezer. The outside of the door is hooked up to the inside with 4 plastic brackets, each covering a metal piece. The metal is fine. But one plastic piece has already cracked from repeated opening, and the temperature shifts probably haven't helped either. The best I could do was glue it back on, because it's practically impossible to obtain the exact replacement part I need. Whoever designed this did not plan for it to be used for more than a few years. For an essential household appliance, this is shameful. And yet it is normal.

Products simply used to have a much longer lifespan. They were built to last, and were expected to last. When you bought an appliance, even a small one, it was an investment. Whatever gains come from making something lighter and easier to transport are undone when you end up transporting and disposing of 2 or 3 of them in the time you used to need only one.

This is also a difference that you can only notice in the long term. In the short term, people will prefer the cheaper product, even if it's more expensive eventually. Hence, the long-lasting products are pushed out of the market, replaced with imitations that seem more modern and less resource intensive, but which are in fact the exact opposite.

The only counterweight is a sufficient number of craftsmen and experts who create demand for the "real" thing. If those craftsmen retire without passing on their knowledge, the degradation sets in. Even if the knowledge is passed on, it's worthless if the tools and parts those craftsmen depend on disappear or lose their luster.

This isn't limited to plastic either. Even parts that are made out of metal can be produced in good or bad ways. When cheap alloys replace expensive ones, when tolerances are slowly eroded away down to zero, the result is undeniably inferior. Yet it's difficult to tell without a detailed breakdown of the manufacturing process.

A striking example comes in the form of the Dubai Lamp. These are LED lamps, made specifically for the Dubai market, through an exclusive deal. They're identical in design to the normal ones, except the Dubai Lamp has far more LED filaments: it's designed to be underpowered instead of running close to tolerance. As a result, these lamps last much longer instead of burning out quickly.

Invisible Software

Luckily, the real world still provides plenty of sanity checks. The above is relatively easy to explain, because it can be stated in terms of our primary senses. If food tastes different, if a product feels shoddy and breaks more quickly, it's easy to notice, if you know what to look for.

But one domain where this does not apply at all is software. The reason is simple: software operates so quickly, it's beyond our normal ability to fathom. The primary goal of interactive software is to provide seamless experiences that deliberately hide many layers of complexity. As long as it feels fast enough, it is fast enough, even if it's actually enormously wasteful.

What's more, there's a perverse incentive for software developers here. At a glance, software developers are the most productive when they use the fastest computers: they spend the least amount of time waiting for code to be validated and compiled. In fact, when Apple released the new M1, which was at least 50% faster than the previous generation—sometimes far more—many companies rushed out and bought new laptops for their entire staff, as if it was a no-brainer.

However, this has a terrible knock-on effect. If developers have machines that are faster than those of the vast majority of their users, they will be completely misinformed about what the typical experience actually is. They may not even notice performance problems, because on their machines, the delays are small enough to go unnoticed. This is made worse by the fact that most developers work in artificial environments, on reduced data sets. They will rarely reach the full complexity of a real-world workload, unless they specifically set up tests for that purpose, informed by a detailed understanding of their users' needs.

On a slower machine, in a more complicated scenario, performance will inevitably suffer. For this reason, I make it a point to do all my development on a machine that is several years out of date. It guarantees that if it's fast enough for me, it will be fast enough for everyone. It means I can usually spot problems with my own eyes, instead of needing detailed profiling and analysis to even notice them.

This is obvious, yet very few people in our industry do so. They instead prefer to have the latest shiny toys, even if it only provides a temporary illusion of being faster.

Apple Powerbook G4 Titanium (2001)

Dysfunctional Cloud

Where this problem really gets bad is with cloud-based services. The experience you get depends on the speed of your internet connection. Most developers will do their work entirely on their own machine, in a zero-latency environment, which no actual end-user can experience. The way the software is developed prevents everyday problems from being noticed until it's too late, by design.

Only in a highly connected urban environment, with fiber to the door and very little latency to the data center, will a user experience anything remotely close to that. In that case, cloud-based software can provide an extremely quick and snappy experience that rivals local software. If not, it's completely different.

There is another huge catch. Implicit in the notion of cloud-based software is that most of the processing happens on the server. This means that if you wish to support twice as many users, you need twice as much infrastructure, to handle twice as many requests. For traditional off-line software, this simply does not apply: every user brings their own computer to the table, and provides their own CPU, memory and storage capacity for what they need. No matter how you structure it, software that can work off-line will always be cheaper to scale to a large user base in the long run.

From this point of view, cloud-based software is a trap in design space. It looks attractive at the start, and it makes it easy to on-board users seamlessly. It also provides ample control to the creator, which can be turned into artificial scarcity, and be monetized. But once it takes off, you are committed to never-ending investments, which grow linearly with the size of your user-base.

This means a cloud-based developer will have a very strong incentive to minimize the amount of resources any individual user can consume, limiting what they can do.

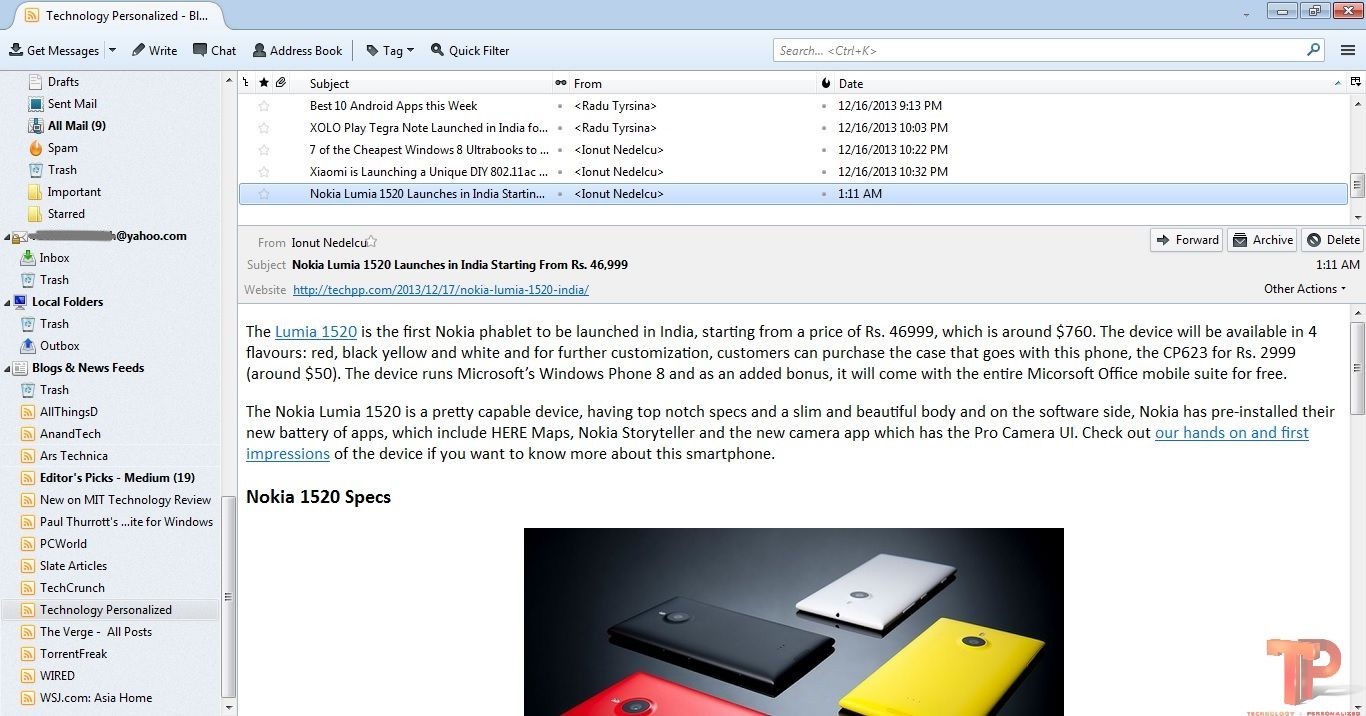

An obvious example is the difference between online and offline email. When using an online email client, you are typically limited to viewing one page of your inbox at a time, showing maybe 50 emails. If you need to find older messages, the primary way of doing so is via search; this search functionality has to be implemented on the server, indexed ahead of time, with little to no customization. There is also a functionality gap between the email itself and the attachments: the latter have to be downloaded and accessed separately.

In an offline email client, you simply have an endless inbox, which you can scroll through at will. You can search it whenever you want, even when not connected. And all the attachments are already there, and can be indexed by the operating system's search mechanism. Even a cheap computer these days has ample resources to store and index decades' worth of email and files.
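To make the contrast concrete, here is a minimal sketch of offline search in Python, using the standard library's `mailbox` module. It assumes the archive is stored locally as a single mbox file; `plain_text`, `search_mbox` and `make_message` are illustrative names, and it only looks at plain-text parts, not attachments. Nothing in it touches a network or a server.

```python
import mailbox
import os
import tempfile
from email.message import EmailMessage


def plain_text(msg) -> str:
    """Concatenate the decoded text/plain parts of a message."""
    chunks = []
    for part in msg.walk():
        if part.get_content_type() == "text/plain":
            payload = part.get_payload(decode=True)  # bytes, or None
            if payload:
                charset = part.get_content_charset() or "utf-8"
                chunks.append(payload.decode(charset, errors="replace"))
    return "\n".join(chunks)


def search_mbox(path: str, needle: str) -> list[str]:
    """Return subjects of messages whose subject or body contains `needle`.

    Case-insensitive, and runs entirely against a local file:
    no pagination, no server-side index, no connection required.
    """
    needle = needle.lower()
    box = mailbox.mbox(path, create=False)
    try:
        return [
            str(msg.get("subject", ""))
            for msg in box
            if needle in str(msg.get("subject", "")).lower()
            or needle in plain_text(msg).lower()
        ]
    finally:
        box.close()


def make_message(subject: str, body: str) -> EmailMessage:
    """Hypothetical helper to build a simple test message."""
    msg = EmailMessage()
    msg["Subject"] = subject
    msg["From"] = "me@example.com"
    msg.set_content(body)
    return msg


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "archive.mbox")
    box = mailbox.mbox(path)
    box.add(make_message("Invoice 2009", "Attached is the invoice."))
    box.add(make_message("Holiday plans", "Let's go to the coast."))
    box.close()
    print(search_mbox(path, "invoice"))  # ['Invoice 2009']
```

A real client would keep a persistent index instead of scanning every message, but even this brute-force scan shows the point: the whole archive is available to the user's own machine, whenever they want it.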

Mozilla Thunderbird with integrated RSS

The New News

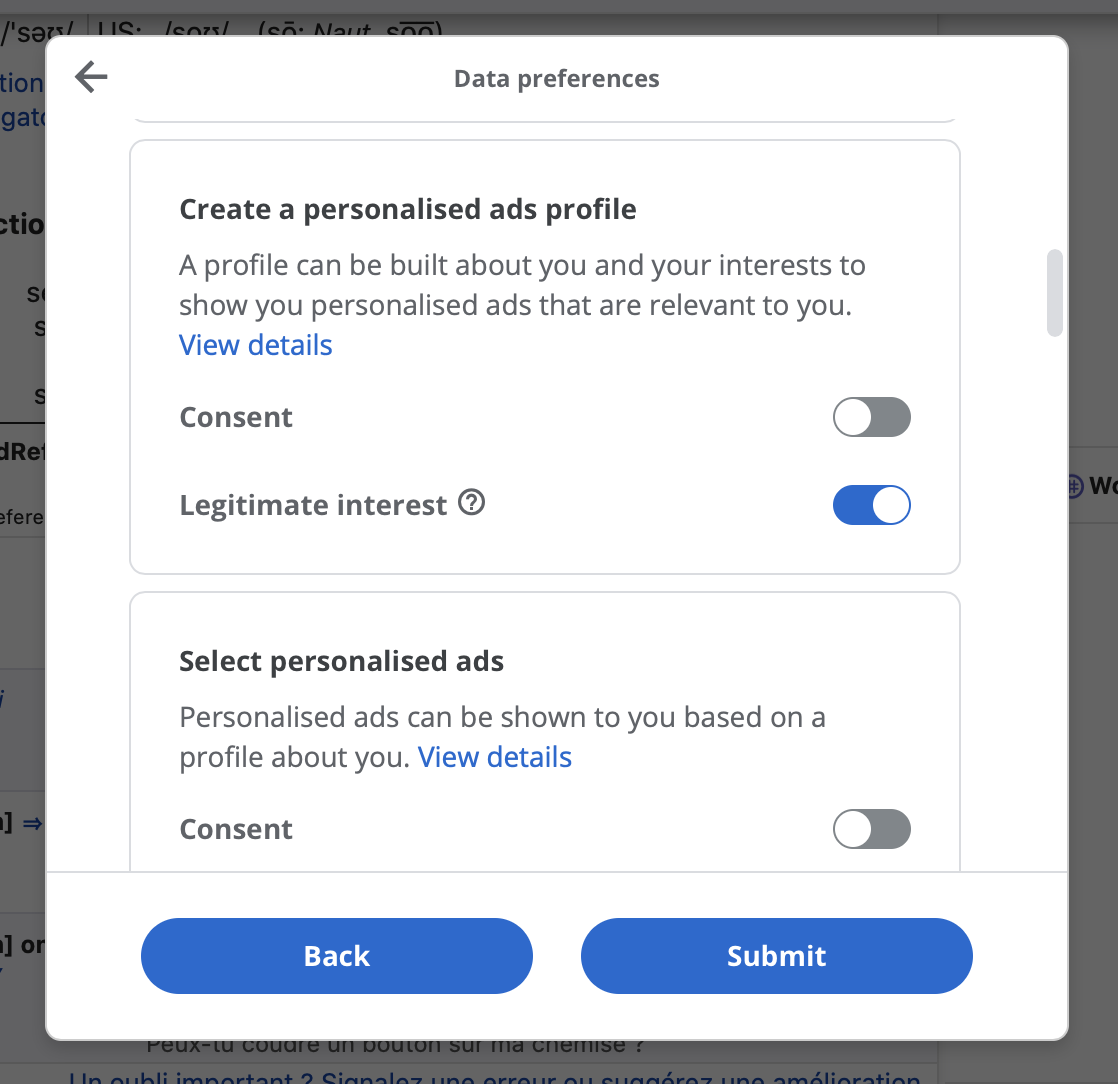

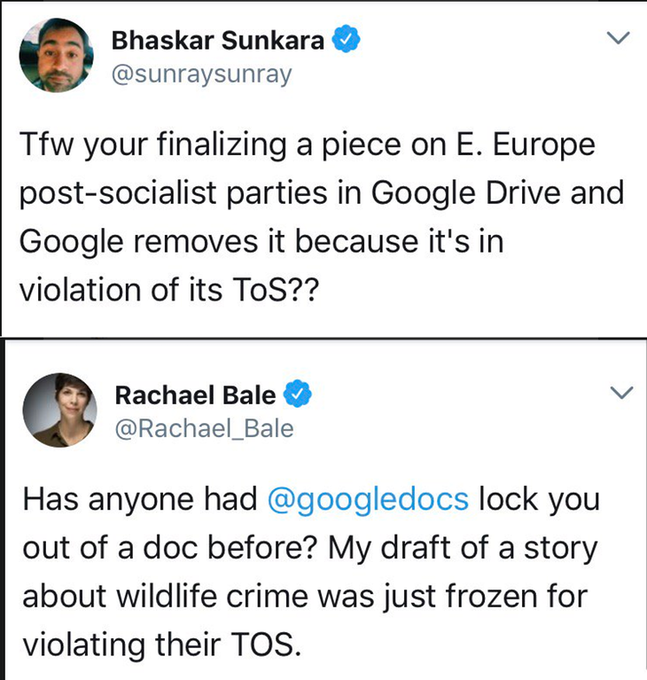

To illustrate the problems with monetization, you need only look at the average news site. To provide a source of income, they harvest data from their visitors, posting clickbait to attract them. But driven by GDPR and similar privacy laws, they now all have cookie dialogs, which make visiting such a site a miserable experience. As long as you keep rejecting cookies, you will keep having to reject cookies. Once you agree, you can no longer revoke consent. The geniuses who drafted such laws did not anticipate the obvious exception of letting sites set a single, non-identifiable "no" cookie, which would apply in perpetuity. Or likely they did, but it was lobbied out of consideration.
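The "no" cookie described above is technically trivial. Here is a rough sketch in Python using the standard library's `http.cookies`; the cookie name `consent` and the ten-year lifetime are hypothetical choices, and this illustrates the mechanism only, not what any privacy law does or does not permit. The stored value is identical for every visitor, so it cannot be used to tell anyone apart.

```python
from http.cookies import SimpleCookie

# Hypothetical cookie name and lifetime; neither comes from any standard.
CONSENT_COOKIE = "consent"
TEN_YEARS = 10 * 365 * 24 * 3600  # seconds


def should_show_banner(cookie_header: str) -> bool:
    """Show the consent dialog only if the visitor has not answered yet."""
    jar = SimpleCookie()
    jar.load(cookie_header or "")
    return CONSENT_COOKIE not in jar


def refusal_cookie() -> str:
    """Build a Set-Cookie value that records 'no' and nothing else."""
    jar = SimpleCookie()
    jar[CONSENT_COOKIE] = "no"
    morsel = jar[CONSENT_COOKIE]
    morsel["max-age"] = TEN_YEARS  # persists across visits
    morsel["path"] = "/"           # applies to the whole site
    return morsel.OutputString()
```

Once the browser sends the cookie back, the dialog simply never appears again, which is exactly the behavior sites currently refuse to offer.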

That's not all. In the early days of GDPR, these dialogs used to provide you with an actual choice, even if they did so reluctantly. But nowadays, even that has gone out the window. Through the ridiculous concept of "legitimate interest", many now require you to explicitly object to fingerprinting and tracking, on a buried second panel. Simply clicking "Disagree" is not sufficient, because that button still means you agree to being "legitimately" tracked, for all the same purposes they used to need cookies for, including ad personalization. Fully objecting means manually unselecting half a dozen options with every visit, sometimes more.

The worst part is the excuse used to justify this: that newspapers have to make their money somehow. Yet this is a sham, because to my knowledge, no news site out there turns off the tracking for paying subscribers. You can pay to remove ads, but you can't pay to remove tracking. Why would they offer that, when doing so would leave money on the table, and tracking is fully legal? The resulting data sets are simply more valuable the more comprehensive they are.

In a different world, most people would do most of their reading via a subscription mechanism such as RSS. A social media client would be an aggregator that builds a feed from a variety of sources. Tracking users' interests would be difficult, because the act of reading is handled by local software.

Of course we can expect that in such a world, news sites would still try to use tracking pixels and other dubious tricks, but, as we have seen with email, remote images can be blocked, and it would at least give users a fighting chance to keep some of their privacy.
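As a rough illustration of that aggregator model, here is a sketch in Python that merges several RSS 2.0 documents into one reverse-chronological timeline on the reader's own machine. It assumes the feeds have already been downloaded as text (fetching is left out), skips items without a `pubDate`, and the names `Entry`, `parse_rss` and `build_feed` are hypothetical.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import datetime
from email.utils import parsedate_to_datetime


@dataclass
class Entry:
    source: str        # title of the channel the item came from
    title: str
    link: str
    published: datetime


def parse_rss(xml_text: str) -> list[Entry]:
    """Extract the dated <item> elements of one RSS 2.0 document."""
    channel = ET.fromstring(xml_text).find("channel")
    if channel is None:
        return []
    source = channel.findtext("title", default="")
    entries = []
    for item in channel.iter("item"):
        pub = item.findtext("pubDate")
        if pub is None:
            continue  # undated items are skipped in this simple sketch
        entries.append(Entry(
            source=source,
            title=item.findtext("title", default=""),
            link=item.findtext("link", default=""),
            # RSS dates use the RFC 822 format, which email.utils parses
            published=parsedate_to_datetime(pub),
        ))
    return entries


def build_feed(documents: list[str]) -> list[Entry]:
    """Merge several feeds into one timeline, newest first."""
    merged = [e for doc in documents for e in parse_rss(doc)]
    return sorted(merged, key=lambda e: e.published, reverse=True)
```

The design choice is the point: ranking, filtering and reading all happen in local code, so the publisher only learns that a feed was downloaded, not which items were read.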

* * *

The conclusion seems obvious to me: the same kind of incentives that made industrial food what it is, and industrial manufacturing what it is, have made industrial software worse for everyone. And whereas web browsing used to be exactly that, browsing, it now means an active process where you are being tagged and tracked by software that spans a large chunk of the web, which makes the entire experience unquestionably worse.

The analogy is even stronger, because the news now seems as bland and tasteless as the tomatoes most of us buy. The lore of RSS and distributed protocols has mostly been lost, and many software developers do not have the skills necessary to make off-line software a success in a connected world. Indeed, very few even bother to try.

It has all happened gradually, just like in 1984, and each individual has little power to stop it, except through their own choices.

Under the guise of progress, we tend to assume that changes are for the better, that the economy drives processes towards greater efficiency and prosperity. Unfortunately, it's a fairy tale, a story contradicted by experience and lore, and something we can all feel in our bones.

The solution is to adopt a long-term perspective, to weigh choices over time instead of for convenience, and to think very carefully about what you give up. When you let others control the terms of engagement, don't be surprised if under the cover of polite every-day business, they absolutely screw you over.